My current company records sensitive client data, including identifiers such as names, addresses and other personally identifiable information. It is then very important for us to keep security in mind in the whole system. Our product is a web application that is accessed through three-factor authentication. We, of course use SSL/TLS to secure the data exchange with end to end encryption. But as sensitive as the data is, we need to make sure we provide enough security layers to make sure any data trasnfer is secure. We are currently developing using Laravel 5.5, the last LTS laravel version, so the code bits of this article will be Laravel-based. However, the concepts are applicable to any environment with a similar problem and structure.

Structure

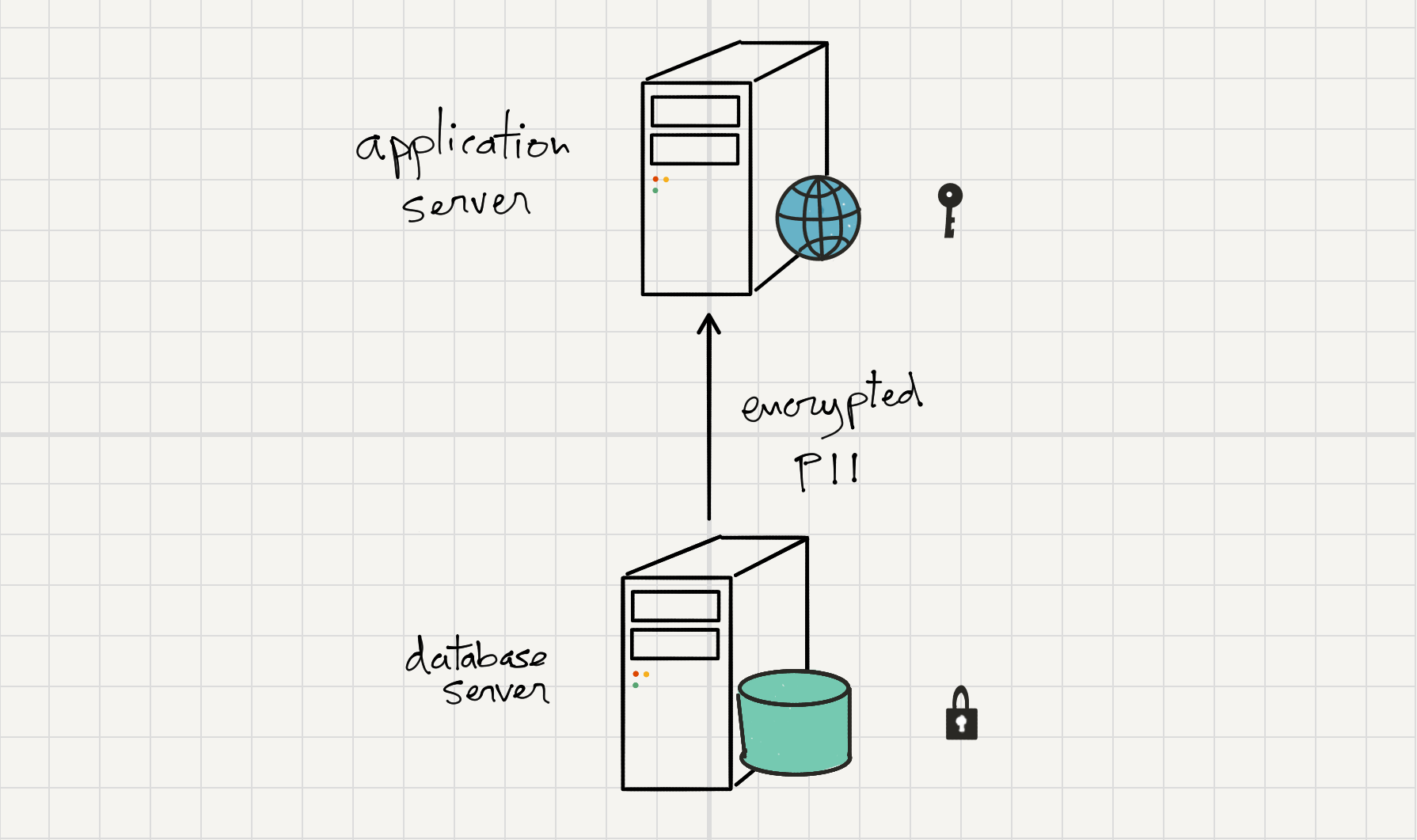

Our database and application servers are physical servers, racked in the same data center and network but separate machines. We would like to be able to protect our client's data in case the database server was compromised but not the application server. That said, it makes sense to encrypt the sensitive information fields on DB, as long as the DB server doesn't have access to the encryption keys. Lastly, the system needs to support searching, as users need to be able to search for a client's name, surname, etc.

Before we start

Before we get to the matter, let's briefly explain some cryptograpy concepts:

Encrypting is the practice of encoding information in a way that only someone with a corresponding key can decode and read it. Encryption is a two-way function. When some information is encrypted, it is supposed to be decrypted at some point.

Hashing is the practice of using an algorithm to map data of any size to a fixed length. It can be used to index non-numerical data. Hashing is a one-way function. When some information is hashed, it is not supposed to be 'un-hashed'. While it’s technically possible to reverse-hash something, it would involve brute force re-engineering so the computing power required makes it unfeasible.

Salting is a concept that typically pertains to password hashing, although it can also apply to encryption. Essentially, it’s a unique value that can be added to the end of the original text to create a different hash value. This adds a layer of security to the hashing process, specifically against brute force attacks.

Implementing encryption of DB fields in Laravel

We're going to use the Laravel Crypt façade, that uses AES-256 encryption by default. Secure encryption schemes, like AES, are designed so that encrypting the same value twice generates different encrypted values. That way, the encryption is non-deterministic and thus secure against an evesdropping attack. Encrypted values are longer than their plain text counterparts so in order to store the encrypted data, we are going to use text fields on DB.

In order to access DB fields, we can override the default accessors and mutators of a Laravel (Eloquent) model to decrypt the value when accessing and encrypt it before persisting. This can be developed in a trait, so it can be reused in any model:

<?php

// ...

use Illuminate\Support\Facades\Crypt;

trait Encryptable

{

public function getAttribute($key)

{

$value = parent::getAttribute($key);

if ($value !== null && in_array($key, $this->encrypted))

{

$value = Crypt::decrypt($value);

}

return $value;

}

public function setAttribute($key, $value)

{

if (in_array($key, $this->encrypted))

{

$value = Crypt::encrypt($value);

}

return parent::setAttribute($key, $value);

}

// ...

If we include the property $this->encrypted in our model, containing the names of the encrypted properties we can, with this simple trait, acomplish the encryption/decryption of database fields.

Searchable encryption

However, as the encryption scheme is non-deterministic, searching is not possible as if we encrypt the filtering fields provided on the searching action, it would generate a different encrypted string with which comparison would make no sense. So in order to be able to search, we need to add an index for every encrypted column.

The idea is to store a keyed hash (rather than a salted hash) of the unencrypted value in a separate column to use as an index. It is important that the hash key is different from the encryption key and unknown to the database server.

The following code examples assume that every encrypted field on the model, will have a corresponding index, named in the same way but with the string '_index' added at the end.

Let's consider the following model:

CREATE TABLE `clients` (

`id` bigint(20) unsigned NOT NULL AUTO_INCREMENT,

`forename` text,

`surname` text,

`forename_index` varchar(64),

`surname_index` varchar(64),

`created_at` timestamp NULL DEFAULT NULL,

`updated_at` timestamp NULL DEFAULT NULL,

PRIMARY KEY (`id`)

);The migration up() function would look like:

public function up()

{

Schema::create('clients', function (Blueprint $table) {

$table->increments('id');

$table->text('forename');

$table->text('surname');

$table->string('forename_index');

$table->string('surname_index');

$table->timestamps;

});

}We need to modify our trait to include the functionality to store the index:

<?php

namespace App;

use Illuminate\Support\Facades\Crypt;

use Illuminate\Support\Str;

// ...

trait Encryptable

{

public function getAttribute($key)

{

$value = parent::getAttribute($key);

if ($value !== null && in_array($key, $this->encrypted))

{

$value = Crypt::decrypt($value);

}

return $value;

}

public function setAttribute($key, $value)

{

if (Str::endsWith($key, "_index"))

return $this;

if (in_array($key, $this->encrypted))

{

parent::setAttribute("{$key}_index", $this->hash($value));

$value = Crypt::encrypt($value);

}

return parent::setAttribute($key, $value);

}

public function attributesToArray()

{

$attributes = parent::attributesToArray();

foreach ($attributes as $key => $value)

{

// if the attribute is an index, remove it from the. attributes list.

if (Str::endsWith($key, "_index"))

{

unset($attributes[$key]);

}

else

{

if (in_array($key, $this->encrypted))

{

if($value)

{

$attributes[$key] = Crypt::decrypt($value);

}

}

}

}

return $attributes;

}

// hash function to create the index. This is not production-quality code.

public function hash($value)

{

return hash_hmac("sha256", $value, base64_decode("2C83ZwVdGPgMUi8Z16MvGmjeaSXz3HpYuHWuW7sR9ZY="));

}

// ...

}Our eloquent model would look like the following:

<?php

namespace App;

use App\Encryptable;

use Illuminate\Database\Eloquent\Model;

class Client extends Model

{

use Encryptable;

protected $encrypted = ['forename', 'surname'];

}

So if we input the data below, the DB content would result as follows:

[

{

"forename": "John",

"surname": "Reynolds"

}

][

{

"forename": "eyJpdiI6IjlIbzcrWHFvUHdSZkhndUp3M2Zoa2c9PSIsInZhbHVlIjoiSlg4aFlXcWg3bGZxcXdVVlowdnVkUT09IiwibWFjIjoiYTA0MzU0YzZlNWNkYzhkMWJlNTQ1MjUzOThiMGFjMmRlODRmYmIxZmM4MjQzMTQ4OTUzM2JkNDg4ZDM4ZjU5YyJ9",

"surname": "eyJpdiI6IlRJNDN0a2U1TUp3VkJqZGFVY0gyb3c9PSIsInZhbHVlIjoiZXhVKzBLMXdOQWdcL1ZNK1JEVEZqUXc9PSIsIm1hYyI6IjlmZGI2N2Q0ZmEzY2Q2MzFjZTdmZjZjZmMyODM4ZWZlMjkzMGUxMDBiM2NiMTg1YWQ5YmRmNmNiMTFlNjI5ODIifQ==",

"forename_index": "5d81f6bac397a44b58bdae00a185cb1fa844ed2ff9a14efe0805a2b22b3ebcbb",

"surname_index": "a12afb44b0845a481c705a1c990d43c52d7a51e384c8a72665d0036d614ca6c6"

}

]Lastly, when a controller action wants to filter models depending on one or more encrypted fields, we need to make sure that the same hash function is applied to the requested fields and use the hashed index instead of the raw field.

We can achieve this in Laravel by overriding the default eloquent query builder that our model uses. First, we need to extend the eloquent query builder:

<?php

namespace App;

use Illuminate\Database\Eloquent\Builder as EloquentBuilder;

class EncryptableQueryBuilder extends EloquentBuilder{

protected $encrypted = [];

protected $hashFunction;

public function __construct($query, $encrypted, $hashFunction)

{

$this->encrypted = $encrypted;

$this->hashFunction = $hashFunction;

parent::__construct($query);

}

protected function hash($value)

{

$hash = $this->hashFunction;

return $hash($value);

}

/**

* Add a basic where clause to the query.

*

* @param string|array|\Closure $column

* @param mixed $operator

* @param mixed $value

* @param string $boolean

* @return $this

*/

public function where($column, $operator = null, $value = null, $boolean = 'and')

{

$encrypted = $this->encrypted;

$function = function ($item) use ($encrypted){

if (in_array($item[0], $encrypted))

{

return ["{$item[0]}_index", $item[1], $this->hash($item[2])];

}

return $item;

};

if (is_array($column))

{

$arrayColumn = [];

foreach ($column as $key => $val) {

if (is_numeric($key) && is_array($val)) {

$arrayColumn[$key] = array_map($function, $val);

} else {

if (in_array($key, $encrypted))

{

$arrayColumn["{$key}_index"] = $this->hash($val);

}

else

{

$arrayColumn[$key] = $val;

}

}

}

return parent::where($arrayColumn, $operator, $value, $boolean);

}

if (in_array($column, $encrypted))

{

[$value, $operator] = $this->prepareValueAndOperator(

$value, $operator, func_num_args() === 2

);

$column .= "_index";

$value = $this->hash($value);

}

return parent::where($column, $operator, $value, $boolean);

}

}

and finally, include the following function in the Encryptable trait, to override the default model one:

<?php

namespace App;

use Illuminate\Support\Facades\Crypt;

use Illuminate\Support\Str;

use App\EncryptableQueryBuilder;

trait Encryptable

{

// Rest of the trait code

public function newEloquentBuilder($query)

{

$hashFunction = function ($value) {

return $this->hash($value);

};

return new EncryptableQueryBuilder($query, $this->encrypted, $hashFunction);

}

}And that's it! Now, we can filter using plain data directly on the on the controller action, so if define our controller action in the laravel web routes file:

<?php

// ...

Route::get('clients/search', function(Request $request){

return Client::where($request->all())->get();

});

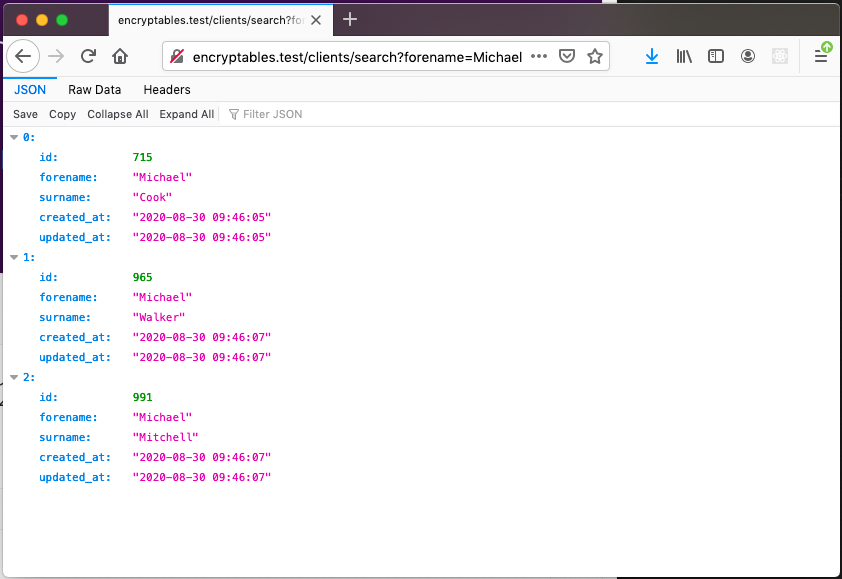

We will get the desired data without the need of manually hashing and indexing the filtering fields:

Conclusions and furtherwork

Given our example and the encryption and hashing key are different and stored in the application server, and the database can't access them, then an attacker that compromises the database server only will be able to learn if clients have the same name or surname but not what the name is. This multiple entry leak is necessary in order for indexing to be possible, which could be a risk for common names in this example. It could also reveal if two seemingly unrelated people in the DB share the same address, the same surname and other data that reveal that those people belong to the same family.

Also this approach has the limitation of only working for exact matches. If two strings differ in a meaningless way (for example the letter case) it will always generate a different hash so would never find a match. Names and surnames can also include particles or characters (de, de la, O', Mac) that should be ignored for search. For example, if we're searching the surname O'Brian, it should be found by "O'brian", "Obrian", "OBrian". If we are searching "de León", it should be found by "de Leon", "Leon", "león", etc.

A first solution to this could be process the name index before being hashed to perform trasnformation to lower-case, remove particles and accents, map non-standard-ASCII latin characters to its corresponding ASCII (ñ, ß, õ, å, ü to n, ss, o, a, u), etc. I'd like to investigate how useful it would be to combine this with the use of the levenstein function in PHP as well as soundex or metaphone to create a more. It could help us implement a fuzzier name search.